AI Explainability in the Global South

The Digital Humanities Lab will host a virtual event as part of our new series on essential perspectives in ethical AI innovation! At this event, Dr. Chinasa T. Okolo will discuss her research on developing an inclusive praxis for emerging technology users, such as community health workers in low-resource healthcare settings.

As researchers and technology companies rush to develop artificial intelligence (AI) applications that address social impact problems in domains such as agriculture, education, and healthcare, Dr. Okolo argues that it is critical to consider the needs of users like community health workers (CHWs), who will increasingly be expected to operate tools that incorporate these technologies.

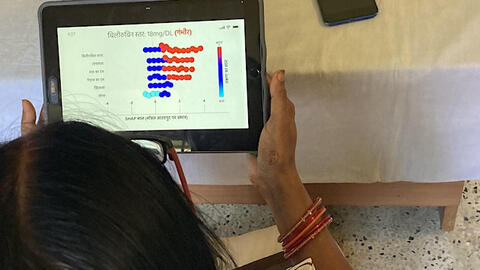

Dr. Okolo’s research shows that CHWs have low levels of AI knowledge, form incorrect mental models of how AI works, and at times may trust algorithmic decisions more than their own judgment. This is concerning, given that AI applications targeting the work of CHWs are already in active development, and early deployments in low-resource healthcare settings have reported failures that created additional workflow inefficiencies and inconvenienced patients. Explainable AI (XAI) can help avoid such pitfalls, but nearly all prior work has focused on users who live in relatively resource-rich settings (e.g., the US and Europe) and who arguably have substantially more experience with digital technologies in general and AI systems in particular.

In her dissertation work, Dr. Okolo engages critically with CHWs and AI practitioners to investigate the feasibility of (X)AI in resource-constrained environments in the Global South and to provide actionable recommendations for practitioners (designers, developers, researchers, etc.) interested in building tools that are accessible to users in these contexts.

Please register via Eventbrite to attend.